Talking Avatar

Are you looking for a way to bring your avatar to life?

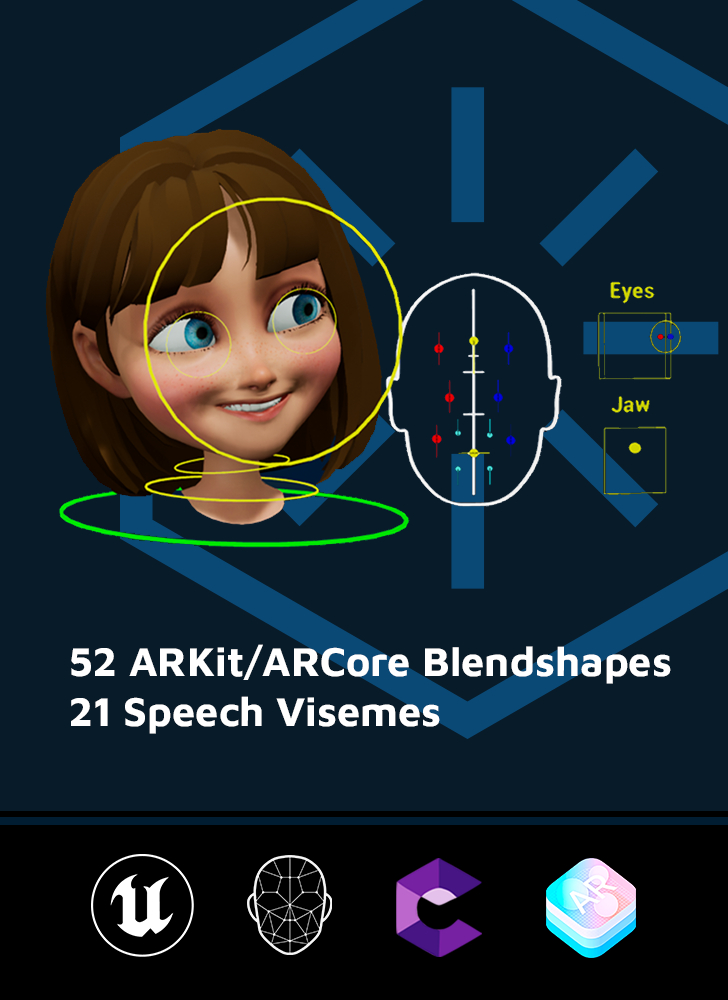

Polywink's rigging expertise will enable you to control your character's facial expressions down to the smallest detail with a facial rig of 73 blendshapes and 4 additional joints.

Power of Speech and Motion Capture

The 73 blendshapes include 21 visemes dedicated to your character’s lip movements, for greater realism. Make your avatar sound and look natural by combining recorded voice audio or a text-to-speech solution (such as Amazon Polly or Google TTS) with a lip sync technology such as Oculus Lipsync.
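As a rough illustration of how a text-to-speech service and viseme blendshapes fit together, here is a minimal Python sketch. It assumes Amazon Polly viseme speech marks (JSON lines with a `time` in milliseconds, a `type` and a viseme `value`) and picks, for each animation frame, the one viseme blendshape to drive at full weight. The blendshape names (`viseme_PP`, `viseme_aa`, ...) and the Polly-to-blendshape mapping are hypothetical placeholders, not the names used on a delivered Polywink rig.

```python
import json

# Sample Amazon Polly speech marks (JSON lines). "time" is in milliseconds.
# A word mark is included to show that only viseme marks are used.
SPEECH_MARKS = """\
{"time":0,"type":"viseme","value":"sil"}
{"time":100,"type":"word","start":0,"end":2,"value":"Pa"}
{"time":100,"type":"viseme","value":"p"}
{"time":200,"type":"viseme","value":"a"}
{"time":300,"type":"viseme","value":"sil"}
"""

# Hypothetical, approximate mapping from Polly viseme symbols to rig blendshape
# names. Replace these placeholders with the names found on your own model.
VISEME_TO_BLENDSHAPE = {
    "sil": "viseme_sil", "p": "viseme_PP", "f": "viseme_FF", "T": "viseme_TH",
    "t": "viseme_DD", "k": "viseme_kk", "S": "viseme_CH", "s": "viseme_SS",
    "r": "viseme_RR", "a": "viseme_aa", "@": "viseme_aa", "e": "viseme_E",
    "E": "viseme_E", "i": "viseme_ih", "o": "viseme_oh", "O": "viseme_oh",
    "u": "viseme_ou",
}


def parse_visemes(text):
    """Return (time_ms, viseme) pairs from JSON-lines speech marks, sorted by time."""
    marks = [json.loads(line) for line in text.splitlines() if line.strip()]
    visemes = [(m["time"], m["value"]) for m in marks if m["type"] == "viseme"]
    # Sort on time only so marks sharing a timestamp keep their original order.
    return sorted(visemes, key=lambda v: v[0])


def blendshape_per_frame(visemes, duration_ms, fps=30):
    """For each frame, return the blendshape to set to weight 1.0 (others stay at 0)."""
    n_frames = int(duration_ms * fps / 1000) + 1
    if not visemes:
        return ["viseme_sil"] * n_frames
    frames, idx = [], 0
    for f in range(n_frames):
        t = f * 1000 / fps
        # Advance to the latest viseme that has started by time t
        # (frames before the first mark reuse the first viseme).
        while idx + 1 < len(visemes) and visemes[idx + 1][0] <= t:
            idx += 1
        frames.append(VISEME_TO_BLENDSHAPE.get(visemes[idx][1], "viseme_sil"))
    return frames


if __name__ == "__main__":
    track = blendshape_per_frame(parse_visemes(SPEECH_MARKS), duration_ms=300, fps=10)
    print(track)  # ['viseme_sil', 'viseme_PP', 'viseme_aa', 'viseme_sil']
```

Switching a single viseme to full weight per frame is the simplest possible scheme. Lip sync tools such as Oculus Lipsync instead output a weight for every viseme on each frame, which usually gives smoother mouth motion.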

Deliverable 3D Formats

Polywink offers three deliverable formats:

• FBX file: compatible with most 3D software, including Unity, Unreal Engine and Blender

• Maya scene: receive a Maya scene (up to Maya 2022) with a faceboard for keyframe animation, plus an FBX file

• Unreal Engine project: receive an Unreal Engine 5 project with a Live Link setup, including the Oculus Lipsync plugin for voice-driven lip sync, and an animation faceboard

Free sample download

Not sure yet? Download a free sample below to get an idea of what the Talking Avatar service delivers!

What does this service do?

The Talking Avatar service brings your 3D head model to life by rigging it so that it is compatible with most motion capture software and third-party speech tools. By purchasing the Talking Avatar service, you will get:

• 52 ARKit/ARCore blendshapes

• 4 joints to control the upper jaw, the lower jaw, the left eye and the right eye

• 21 visemes compatible with lip sync solutions

What is the purpose of this service?

This service has many applications: it has been used to create game characters, AI chatbots, virtual influencers, VTubers and more. The only limit is your imagination!

We’ve worked with 3D professionals and 3D enthusiasts alike, on cartoonish, realistic and photorealistic digital human models.

What’s the difference between the three deliverables?

• The Maya and Unreal Engine options include an animation faceboard, enabling you to easily animate your character’s face.

• The FBX format allows you to use your rigged model in any 3D software that supports FBX.

What are the requirements?

When you check out and upload your model, make sure it meets the following requirements:

• The model can have up to 200,000 polygons

• Your 3D model must have a neutral pose (eyes open and mouth shut)

• You must include your model’s eyeballs and inner mouth (teeth, gums, tongue) as additional elements

• Your model can be uploaded with its full body, rig and skinning

Any other features, such as eyelashes, eyebrows or beards, should also be included.

Once you upload your model, it is added to the queue and our team checks it. As soon as it is approved and your order reaches the front of the queue, it is processed within 24 hours.

By default, we combine the different geometries into a single mesh to make the model easier to use with motion capture. If you would like separate blendshapes for each geometry instead, we can do that too: just let us know when you place your order!

Support and retakes are provided free of charge for 3 months after the delivery date.

Data sheet

- Blendshapes: 73

- Compatibility: iOS 11 / ARKit 2 or later; Android 7 / ARCore 1.7 or later

- Delivery format: .FBX; .MB + .FBX for the Maya option; or a UE5 project (folder containing the .uproject file)

- Delivery: 24h (once the status is updated to "processing in progress")